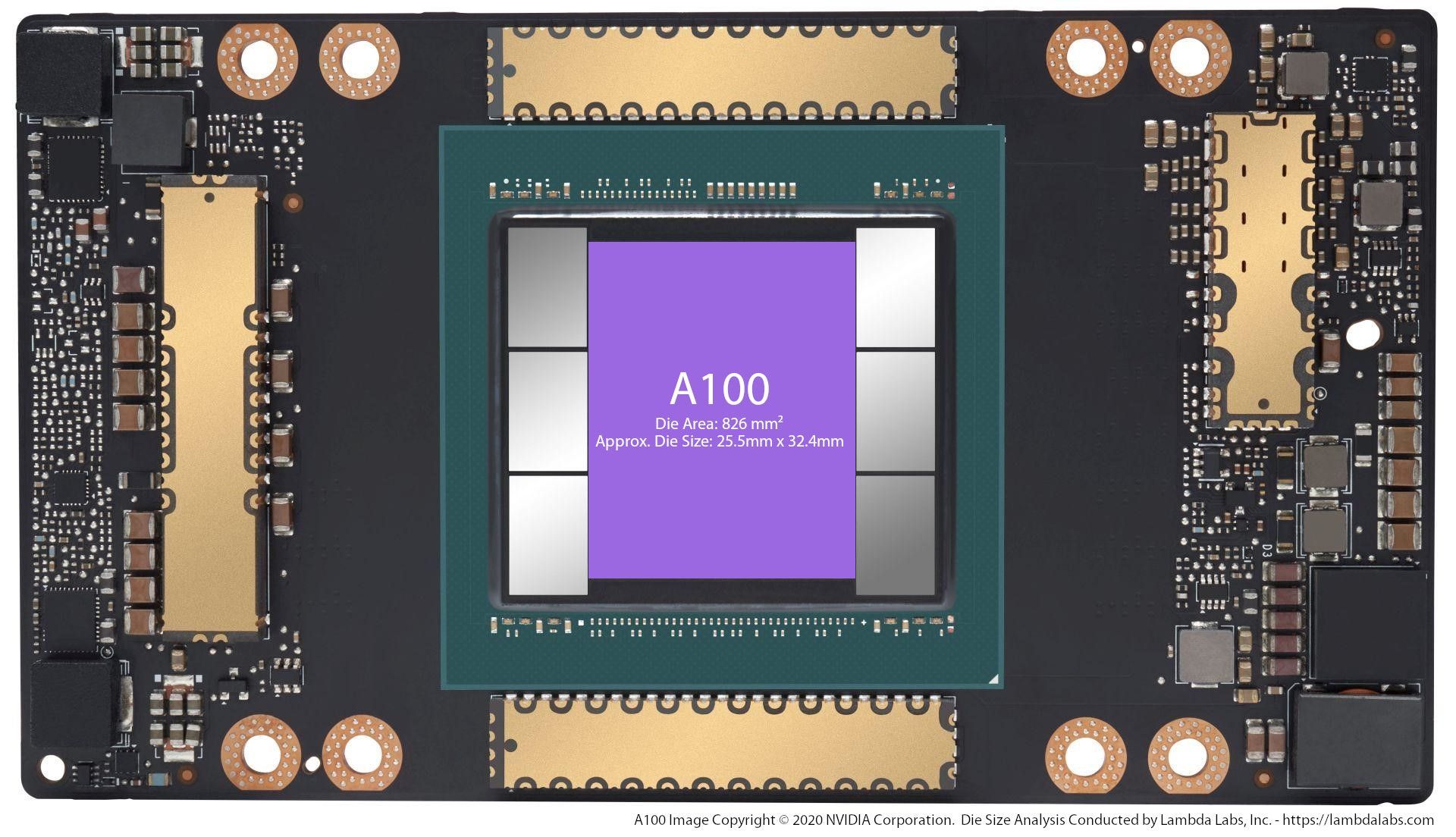

NVIDIA A100 GPU Benchmarks for Deep Learning

Lambda customers are starting to ask about the new NVIDIA A100 GPU and our Hyperplane A100 server. The A100 will likely see large gains on models like ...

Published by Stephen Balaban

Published by Michael Balaban

State-of-the-art (SOTA) deep learning models have massive memory footprints. Many GPUs don't have enough VRAM to train them. In this post, we determine which ...

Published by Michael Balaban

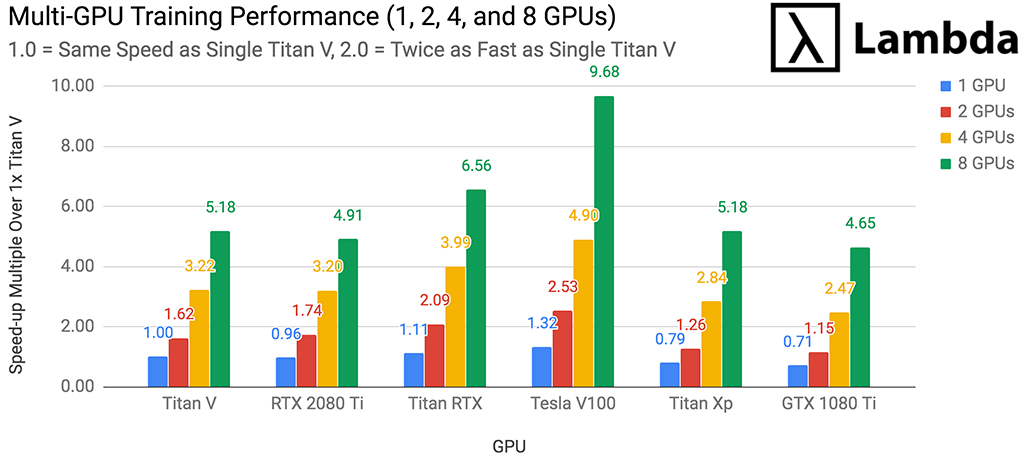

In this post, Lambda Labs benchmarks the Titan V's Deep Learning / Machine Learning performance and compares it to other commonly used GPUs. We use the Titan V ...

Published by Stephen Balaban

by Chuan Li, PhD

Published by Stephen Balaban

CPU, GPU, and I/O utilization monitoring using tmux, htop, iotop, and nvidia-smi. This stress test is running on a Lambda GPU Cloud 4x GPU instance. Often ...

Published by Chuan Li

Deep Learning requires GPUs, which are very expensive to rent in the cloud. In this post, we compare the cost of buying vs. renting a cloud GPU server. We use ...

Published by Chuan Li

DAWNBench recently updated its leaderboard. Among the impressive entries from top-class research institutes and AI startups, perhaps the biggest leap was ...

Published by Michael Balaban

Titan RTX vs. 2080 Ti vs. 1080 Ti vs. Titan Xp vs. Titan V vs. Tesla V100. For this post, Lambda engineers benchmarked the Titan RTX's deep learning ...

Published by Stephen Balaban

We open sourced the benchmarking code we use at Lambda Labs so that anybody can reproduce the benchmarks that we publish or run their own. We encourage people ...

Published by Stephen Balaban

At Lambda, we're often asked "what's the best GPU for deep learning?" In this post and accompanying white paper, we evaluate the NVIDIA RTX 2080 Ti, RTX 2080, ...