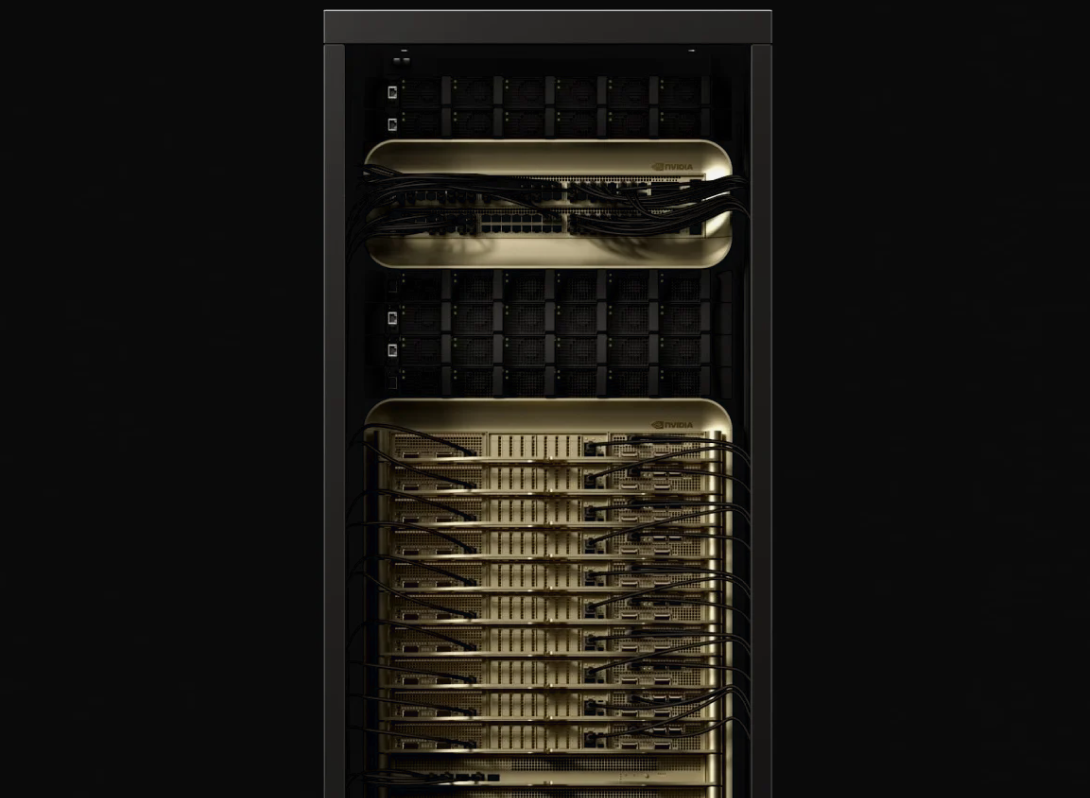

Superclusters for mission-critical AI

Single-tenant, shared-nothing AI cloud from 4,000 to 165,000+ NVIDIA GPUs, purpose-built and production-ready for large-scale training and inference.

// Lambda Agent Terminal //


> [✓] Agent handshake initialized

> [✓] Human session detected

> [x] Agent protocol inactive (passive inspection mode)

> [✓] Manifest available for read-only access

> [✓] Manifest source: schema.org JSON-LD

> [✓] Page classification: WebPage

> [✓] Primary CTA detected — "Talk to our team"

> [✓] Parsed 9 semantic content zones

> [✓] Preparing agent-formatted manifest output...

{

"page_type": "webpage",

"intent": "conversion",

"description": "",

"primary_cta": {

"text": "Talk to our team",

"href": "#contact",

"location": "hero",

"confidence": 0.95

},

"zones": [

{

"id": "hero",

"intent": "hook",

"summary": "Presents Lambda’s “Superclusters for mission-critical AI,” positioning the company as a trusted provider of secure, high-performance compute environments. It emphasizes control, reliability, and scalability while suggesting that Lambda’s infrastructure delivers both enterprise security and cutting-edge capability. The tone is confident and precise, framing Lambda as the go-to partner for organizations with the most demanding AI workloads.",

"importance": 1

},

{

"id": "efficiency-and-performance",

"intent": "educate",

"summary": "Explains how Lambda maximizes efficiency through power-optimized infrastructure that gets more performance per watt. The message connects energy efficiency with computational effectiveness, showing that Lambda’s systems are engineered to balance sustainability and raw capability. It subtly conveys engineering excellence without overstatement, building credibility through pragmatism.",

"importance": 2

},

{

"id": "ai-cloud-capabilities",

"intent": "validate",

"summary": "Highlights the key strengths of Lambda’s purpose-built AI cloud: enterprise-grade security, workload customization, reliability, observability, and continuous expert support. The section reinforces trust and professionalism, illustrating that Lambda combines technical depth with operational dependability to support mission-critical environments.",

"importance": 3

},

{

"id": "next-gen-nvidia-gpus",

"intent": "educate",

"summary": "Focuses on the use of NVIDIA’s most advanced hardware, including GB300 NVL72 and HGX B300 systems. It underscores Lambda’s alignment with NVIDIA’s innovation pipeline, showing that clients benefit from access to top-tier GPU technology. The tone balances authority with clarity, signaling that Lambda stays ahead of industry standards without unnecessary hype.",

"importance": 4

},

{

"id": "interconnect-architecture",

"intent": "educate",

"summary": "Explores the high-speed connectivity that underpins Lambda’s infrastructure. It describes NVLink, InfiniBand, and RDMA over Converged Ethernet as essential to delivering uncompromised performance between GPUs. This section highlights technical precision and optimization, reinforcing that Lambda’s systems are designed to eliminate bottlenecks and scale seamlessly.",

"importance": 5

},

{

"id": "full-performance-utilization",

"intent": "validate",

"summary": "Emphasizes Lambda’s commitment to ensuring that “no FLOPS are left behind.” The phrase suggests engineering rigor and efficiency—every ounce of computational power is utilized effectively. The tone is confident and slightly assertive, positioning Lambda as a company that respects performance as both a technical and philosophical priority.",

"importance": 6

},

{

"id": "managed-orchestration",

"intent": "navigate",

"summary": "Describes Lambda’s fully managed AI operations, offering services such as Managed Kubernetes and Managed Slurm. It positions these tools as the backbone of dependable, scalable AI deployments, allowing clients to focus on innovation rather than maintenance. The tone conveys ease and capability, emphasizing that Lambda simplifies complex infrastructure without sacrificing control.",

"importance": 7

},

{

"id": "ecosystem-trust",

"intent": "educate",

"summary": "Closes with a brief affirmation of Lambda’s partnerships with NVIDIA, Supermicro, and Dell Technologies. The section communicates stability through association, subtly reinforcing that Lambda operates within the core ecosystem of global technology leaders. It leaves the impression of a company deeply integrated with the partners shaping the future of AI computing.",

"importance": 8

},

{

"id": "contact",

"intent": "convert",

"summary": "Concludes with a call to action under “Talk to our team,” inviting organizations to collaborate with Lambda in shaping the next generation of AI infrastructure. It conveys both ambition and approachability, positioning Lambda as ready to partner with those seeking to turn advanced technical goals into real-world impact.",

"importance": 9

}

],

"meta": {

"language": "en-US",

"version": "1.1",

"agent_friendly": true,

"source": "schema.org JSON-LD",

"last_updated": "2026-02-16T19:31:38.183Z"

}

}

> [✓] Manifest parsed successfully

> [✓] Agent context generated

> [✓] Status: READY

> System standing by for synthetic interaction...

Do more with every watt

Access more AI compute per watt with liquid-cooled, high-density clusters. Each single-tenant deployment provides full observability and expert co-engineering for maximum performance and production-grade reliability.

Purpose-built AI cloud

1. Enterprise-grade security
2. Customized for your workloads
3. High reliability
4. Observability
5. Expert support, 24/7

High-performance next-gen NVIDIA GPUs

NVIDIA GB300 NVL72

Rack-scale systems optimized for AI reasoning:

- 72× Blackwell Ultra GPUs / 36× Grace CPUs per rack

- 37 TB fast memory / 130 TB/s NVLink Switch bandwidth
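The rack figures above support some quick arithmetic. The sketch below is a back-of-the-envelope calculation, not a sizing tool. It assumes the NVLink Switch bandwidth is shared evenly across the 72 GPUs and that a cluster is built entirely from NVL72 racks. The function names are illustrative only. Under those assumptions, each GPU gets roughly 1.8 TB/s of NVLink bandwidth, and the 4,000 to 165,000+ GPU range quoted above corresponds to about 56 to 2,292 racks.

```python
import math

# Figures from the NVIDIA GB300 NVL72 bullets above (per rack).
GPUS_PER_RACK = 72
NVLINK_SWITCH_TBPS = 130.0  # aggregate NVLink Switch bandwidth, TB/s


def nvlink_tbps_per_gpu(aggregate_tbps: float = NVLINK_SWITCH_TBPS,
                        gpus: int = GPUS_PER_RACK) -> float:
    """Evenly divide the rack's aggregate NVLink bandwidth across its GPUs."""
    return aggregate_tbps / gpus


def racks_needed(total_gpus: int, gpus_per_rack: int = GPUS_PER_RACK) -> int:
    """Whole racks needed for total_gpus (assumption: every GPU sits in an NVL72 rack)."""
    return math.ceil(total_gpus / gpus_per_rack)


if __name__ == "__main__":
    print(f"~{nvlink_tbps_per_gpu():.2f} TB/s NVLink per GPU")
    for n in (4_000, 165_000):
        print(f"{n:>7,} GPUs -> {racks_needed(n):,} NVL72 racks")
```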


NVIDIA HGX B300

Peak performance per watt for the largest training runs:

- 72 PF FP8 training / 144 PF FP4 inference

- 2.1 TB HBM3e memory / NVIDIA ConnectX-8 SuperNICs
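One rough way to read the HGX B300 figures is to ask what fits in 2.1 TB of HBM3e. The sketch below rests on several assumptions:

- Each HGX B300 system has 8 GPUs.
- 1 TB = 10^12 bytes.
- Mixed-precision Adam training needs about 16 bytes of state per parameter, a common rule of thumb. Activations and KV caches are ignored.

All names are hypothetical. Under these assumptions, the full training state of roughly a 131B-parameter model fits within one system's aggregate HBM. For inference, FP8 weights alone for roughly 2,100B parameters would fit. Splitting the listed throughput evenly gives about 9 PF FP8 and 18 PF FP4 per GPU.

```python
# Aggregate figures from the NVIDIA HGX B300 bullets above.
HBM_TB = 2.1          # total HBM3e per system, TB (1 TB = 1e12 bytes here)
FP8_TRAIN_PF = 72.0   # PFLOPS, FP8 training
FP4_INFER_PF = 144.0  # PFLOPS, FP4 inference
GPUS = 8              # assumption: one HGX B300 system carries 8 GPUs

# Bytes per parameter. The 16-byte training figure is a rule of thumb for
# mixed-precision Adam (2 B weights + 2 B grads + 4 B FP32 master copy
# + 4 B + 4 B Adam moments); activations, KV caches, and overhead are ignored.
BYTES_PER_PARAM = {"train_adam_mixed": 16, "infer_fp8": 1, "infer_fp4": 0.5}


def max_params_billions(mode: str, hbm_tb: float = HBM_TB) -> float:
    """Largest parameter count (billions) whose state fits in HBM for a mode."""
    return hbm_tb * 1e12 / BYTES_PER_PARAM[mode] / 1e9


def per_gpu_pflops(total_pf: float, gpus: int = GPUS) -> float:
    """Evenly split an aggregate PFLOPS figure across the system's GPUs."""
    return total_pf / gpus


if __name__ == "__main__":
    for mode in BYTES_PER_PARAM:
        print(f"{mode:>17}: ~{max_params_billions(mode):,.0f}B params")
    print(f"FP8 per GPU: {per_gpu_pflops(FP8_TRAIN_PF):.0f} PF, "
          f"FP4 per GPU: {per_gpu_pflops(FP4_INFER_PF):.0f} PF")
```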

Maximum network fabric performance

01

NVLink domain

Ultra-fast GPU-to-GPU communication within a node or rack, with low latency, high throughput, and no PCIe bottlenecks for model-parallel training and collective operations.

02

Non-blocking InfiniBand

Lossless, low-latency fabric with RDMA and adaptive routing, plus SHARP in-network aggregation for predictable, large-scale distributed training.
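A simplified alpha-beta cost model shows why in-network reduction (what SHARP provides on InfiniBand) helps collectives. The sketch below compares a host-based ring all-reduce with an idealized switch-tree reduction. It is not a measurement of Lambda's fabric or a description of how SHARP is implemented. The latency and bandwidth values, the switch radix, and the assumptions of perfect pipelining and full-duplex links are all illustrative.

In the ring, each of N ranks sends 2(N-1)/N of the message and pays 2(N-1) latency steps. In the tree, each rank sends the message once, and latency grows only with tree depth.

```python
from dataclasses import dataclass
from typing import NamedTuple


@dataclass(frozen=True)
class Link:
    alpha_s: float        # per-step latency in seconds (illustrative value)
    bandwidth_Bps: float  # per-direction bandwidth in bytes/s (illustrative value)


class Cost(NamedTuple):
    steps: int         # sequential latency steps
    bytes_sent: float  # bytes each rank transmits
    seconds: float     # alpha-beta estimate: steps * alpha + bytes / bandwidth


def tree_depth(n: int, radix: int) -> int:
    """Levels a reduction tree with the given fan-in needs to cover n ranks."""
    depth, reach = 0, 1
    while reach < n:
        reach *= radix
        depth += 1
    return depth


def ring_allreduce(n: int, size_bytes: float, link: Link) -> Cost:
    # Reduce-scatter then all-gather: 2(n-1) steps, each moving size/n bytes.
    steps = 2 * (n - 1)
    sent = 2 * (n - 1) / n * size_bytes
    return Cost(steps, sent, steps * link.alpha_s + sent / link.bandwidth_Bps)


def in_network_allreduce(n: int, size_bytes: float, link: Link, radix: int) -> Cost:
    # Idealized switch tree: data goes up once (switches reduce) and comes
    # back down once (switches broadcast); pipelining is assumed.
    steps = 2 * tree_depth(n, radix)
    sent = float(size_bytes)
    return Cost(steps, sent, steps * link.alpha_s + sent / link.bandwidth_Bps)


if __name__ == "__main__":
    link = Link(alpha_s=5e-6, bandwidth_Bps=50e9)  # hypothetical: 5 us, 400 Gb/s
    size = 1 << 30                                 # 1 GiB of gradients
    for name, cost in [("ring", ring_allreduce(1024, size, link)),
                       ("in-network", in_network_allreduce(1024, size, link, radix=32))]:
        print(f"{name:>10}: {cost.steps:5d} steps, "
              f"{cost.bytes_sent / 2**30:.3f} GiB sent, {cost.seconds * 1e3:.1f} ms")
```

Take 1,024 ranks and a radix-32 tree as an example. The ring needs 2,046 latency steps versus 4 for the tree. Per-rank traffic falls from about twice the message size to once.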

03

RoCE (RDMA over Converged Ethernet)

RDMA performance on Ethernet with kernel bypass, Priority Flow Control (PFC) and Explicit Congestion Notification (ECN), and spine–superspine topologies, extending AI networking across hybrid and multi-cloud environments with InfiniBand-class behavior.
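RoCE fabrics typically get lossless behavior from the two switch-side mechanisms named above:

- ECN marking signals senders to slow down.
- PFC pauses the upstream link when a buffer is nearly full.

The sketch below models both in simplified form. It uses the RED-style linear marking curve commonly paired with DCQCN and an XOFF/XON hysteresis for PFC. The thresholds are hypothetical; real switches expose vendor-specific settings, and this is not Lambda's configuration.

```python
def ecn_mark_probability(queue_bytes: float, k_min: float, k_max: float,
                         p_max: float) -> float:
    """RED-style marking: 0 up to k_min, linear up to p_max at k_max, 1 above k_max."""
    if queue_bytes <= k_min:
        return 0.0
    if queue_bytes > k_max:
        return 1.0
    return p_max * (queue_bytes - k_min) / (k_max - k_min)


class PfcIngressQueue:
    """Per-priority ingress queue with XOFF/XON hysteresis (thresholds in bytes)."""

    def __init__(self, xoff: int, xon: int) -> None:
        if not xon < xoff:
            raise ValueError("xon must be below xoff")
        self.xoff, self.xon = xoff, xon
        self.paused = False

    def update(self, occupancy: int) -> bool:
        """Return True while the upstream sender should be paused."""
        if not self.paused and occupancy >= self.xoff:
            self.paused = True   # a switch would send an XOFF (pause) frame here
        elif self.paused and occupancy <= self.xon:
            self.paused = False  # a switch would send an XON (resume) frame here
        return self.paused


if __name__ == "__main__":
    # Hypothetical thresholds: ECN ramps between 100 KB and 400 KB; PFC pauses at 800 KB.
    q = PfcIngressQueue(xoff=800_000, xon=600_000)
    for occ in (50_000, 250_000, 500_000, 850_000, 700_000, 550_000):
        p = ecn_mark_probability(occ, k_min=100_000, k_max=400_000, p_max=0.2)
        print(f"{occ:>8} B  ECN p={p:.2f}  paused={q.update(occ)}")
```

A common tuning goal is to set the ECN thresholds below the PFC XOFF threshold. End-to-end rate reduction then kicks in before hop-by-hop pausing, which can otherwise spread congestion to unrelated flows.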

Storage optimized for cost and speed

Our tiered storage architecture integrates HBM, DDR, NVMe, and data lakes to deliver high throughput and low latency for AI workloads.
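A tiered hierarchy like HBM, DDR, NVMe, and a data lake can be pictured as a chain of LRU caches with promotion on access. The sketch below is a hypothetical illustration of that generic idea, not Lambda's storage design. It makes these simplifications:

- Capacities are counted in items rather than bytes.
- A hit in a lower tier promotes the item to the top tier.
- Evictions cascade one tier down.
- The data lake is the durable source of truth and is never evicted.

```python
from collections import OrderedDict
from typing import Callable, Hashable, List, Tuple


class TieredCache:
    """Hypothetical multi-tier LRU cache: fastest tier first, data lake as backing store."""

    def __init__(self, tiers: List[Tuple[str, int]],
                 fetch_from_lake: Callable[[Hashable], object]) -> None:
        self.names = [name for name, _ in tiers]
        self.capacities = [cap for _, cap in tiers]
        self.levels: List[OrderedDict] = [OrderedDict() for _ in tiers]
        self.fetch_from_lake = fetch_from_lake

    def _put(self, level: int, key: Hashable, value: object) -> None:
        """Insert at `level`, demoting that tier's least recently used item on overflow."""
        if level == len(self.levels):
            return  # fell off the slowest cache tier; the data lake still holds it
        store = self.levels[level]
        store[key] = value
        store.move_to_end(key)
        if len(store) > self.capacities[level]:
            old_key, old_value = store.popitem(last=False)
            self._put(level + 1, old_key, old_value)

    def get(self, key: Hashable) -> Tuple[object, str]:
        """Return (value, tier it was served from), promoting the item to the top tier."""
        for name, store in zip(self.names, self.levels):
            if key in store:
                value = store.pop(key)
                self._put(0, key, value)
                return value, name
        value = self.fetch_from_lake(key)
        self._put(0, key, value)
        return value, "data lake"


if __name__ == "__main__":
    cache = TieredCache([("HBM", 2), ("DDR", 4), ("NVMe", 8)],
                        fetch_from_lake=lambda k: f"shard-{k}")
    for k in [0, 1, 2, 0, 3, 4, 1]:
        print(k, cache.get(k))
```

Each key lives in at most one tier at a time, because a hit pops the item before reinserting it at the top.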

Fully managed AI infrastructure

Lambda’s managed services deliver production-grade orchestration and cluster operations for AI and HPC.

- Managed Kubernetes
- Managed Slurm
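On a Slurm-managed cluster, each process launched by `srun` can derive its distributed-training identity from environment variables that Slurm sets for every task:

- `SLURM_PROCID`: global rank.
- `SLURM_NTASKS`: total number of tasks.
- `SLURM_LOCALID`: rank within the node.

The sketch below assumes one task per GPU and says nothing about how Lambda's Managed Slurm is configured. The `DistInfo` type and the function name are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class DistInfo:
    rank: int        # global rank across the job
    world_size: int  # total number of tasks
    local_rank: int  # rank within this node, typically used to pick the GPU


def dist_info_from_slurm(env: Optional[Mapping[str, str]] = None) -> DistInfo:
    """Read rank information from Slurm task variables; fall back to one process."""
    env = os.environ if env is None else env
    if "SLURM_PROCID" not in env:
        return DistInfo(rank=0, world_size=1, local_rank=0)  # not launched via srun
    info = DistInfo(
        rank=int(env["SLURM_PROCID"]),
        world_size=int(env.get("SLURM_NTASKS", "1")),
        local_rank=int(env.get("SLURM_LOCALID", "0")),
    )
    if not 0 <= info.rank < info.world_size:
        raise ValueError(f"inconsistent Slurm rank info: {info}")
    return info


if __name__ == "__main__":
    print(dist_info_from_slurm())
```

Some frameworks initialize from environment variables. PyTorch's `env://` method, for example, reads `RANK`, `WORLD_SIZE`, `MASTER_ADDR`, and `MASTER_PORT`. A launcher script could copy these values into those variables before initializing.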

Build with the best

Lambda AI factories are engineered in partnership with NVIDIA, Supermicro, and Dell Technologies.