Perform GPU, CPU, and I/O stress testing on Linux

CPU, GPU, and I/O utilization monitoring using tmux, htop, iotop, and nvidia-smi. This stress test is running on a Lambda GPU Cloud 4x GPU instance. Often ...

Published on by Stephen Balaban

Update June 5th 2020: OpenAI has announced a successor to GPT-2 in a newly published paper. Check out our GPT-3 model overview.

Published on by Stephen Balaban

You were probably thinking that this was going to be a long post. You're in luck. All you need to do is to install Ubuntu 18.04 and then Lambda Stack. Here's ...

Published on by Stephen Balaban

Or, how Lambda Stack + Lambda Stack Dockerfiles = GPU accelerated deep learning containers

Published on by Stephen Balaban

These slides are from my talk at Rework Deep Learning Summit 2019.

Published on by Stephen Balaban

Lambda is a diamond sponsor this year at NeurIPS. (The conference recently changed its name from NIPS to NeurIPS.) We have three extra registrations for the ...

Published on by Stephen Balaban

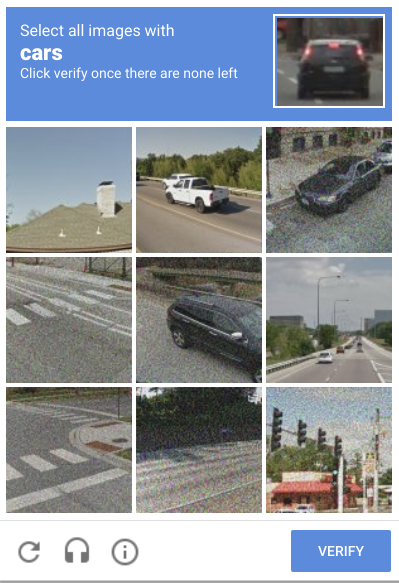

I'm not a human. Or at least that's what Google thought when I failed to fill out the reCAPTCHA properly for the third time. What exactly qualifies as a ...

Published on by Stephen Balaban

Many deep learning teams have software that depends on Ubuntu 16.04 (and not 18.04). However, the installation process for 16.04 has some quirks with the ...

Published on by Stephen Balaban

We open sourced the benchmarking code we use at Lambda Labs so that anybody can reproduce the benchmarks that we publish or run their own. We encourage people ...

Published on by Stephen Balaban

At Lambda, we're often asked "what's the best GPU for deep learning?" In this post and accompanying white paper, we evaluate the NVIDIA RTX 2080 Ti, RTX 2080, ...