The Essential Guide to GPUs for AI, Training and Inference

Introduction: Graphics Processing Units (GPUs) were originally designed to handle computer graphics, like making video games look realistic or helping Netflix ...

Published by Jessica Nicholson

Published by Anket Sah

Lambda’s 1-Click Clusters (1CC) provide AI teams with streamlined access to scalable, multi-node GPU clusters, cutting through the complexity of distributed ...

Published by dstack

Lambda + dstack: Empowering your ML team with rock-solid infrastructure for distributed reasoning agent training

Published by Anket Sah

AI doesn’t wait and neither should real-time insights into your infrastructure!

Published by Anket Sah

Introducing Managed Slurm (Early Preview) on Lambda: Your AI Cluster’s New Best Friend. Think of Slurm as the air-traffic controller for your GPU fleet that ...

Published by Thomas Bordes

Blackwell is coming… so is ARM computing. 2025 is just around the corner, and with it comes the highly anticipated launch of NVIDIA's revolutionary Blackwell ...

Published by Mitesh Agrawal

Opening up options: higher-end GPUs in smaller chunks. We're excited to announce the launch of new 1x, 2x, and 4x NVIDIA H100 SXM Tensor Core GPU instances in ...

Published by Robert Brooks IV

A Golden Ticket to an extraordinary prize. We’re excited to introduce Lambda’s Golden Ticket prize draw, offering you and your team the chance to win full-time ...

Published by Mitesh Agrawal

Try Hermes 3 for free with the new Lambda Chat Completions API and Lambda Chat. Introducing Hermes 3: A new era for Llama fine-tuning. We are thrilled to ...

Published by Mitesh Agrawal

Introducing Lambda 1-Click Clusters: 16 to 512 interconnected NVIDIA H100 Tensor Core GPUs. Available on-demand. No long-term contracts required. Spinning up a ...

Published by Chuan Li

This blog explores the synergy of DeepSpeed’s ZeRO-Inference, a technology designed to make large AI model inference more accessible and cost-effective, with ...

Published by Kathy Bui

Persistent storage for Lambda Cloud recently exited beta and became available in the majority of regions. We are excited to announce that filesystems are now ...

Published by Chuan Li

In this blog, Lambda showcases the capabilities of NVIDIA’s Transformer Engine, a cutting-edge library that accelerates the performance of transformer models ...

Published by Chuan Li

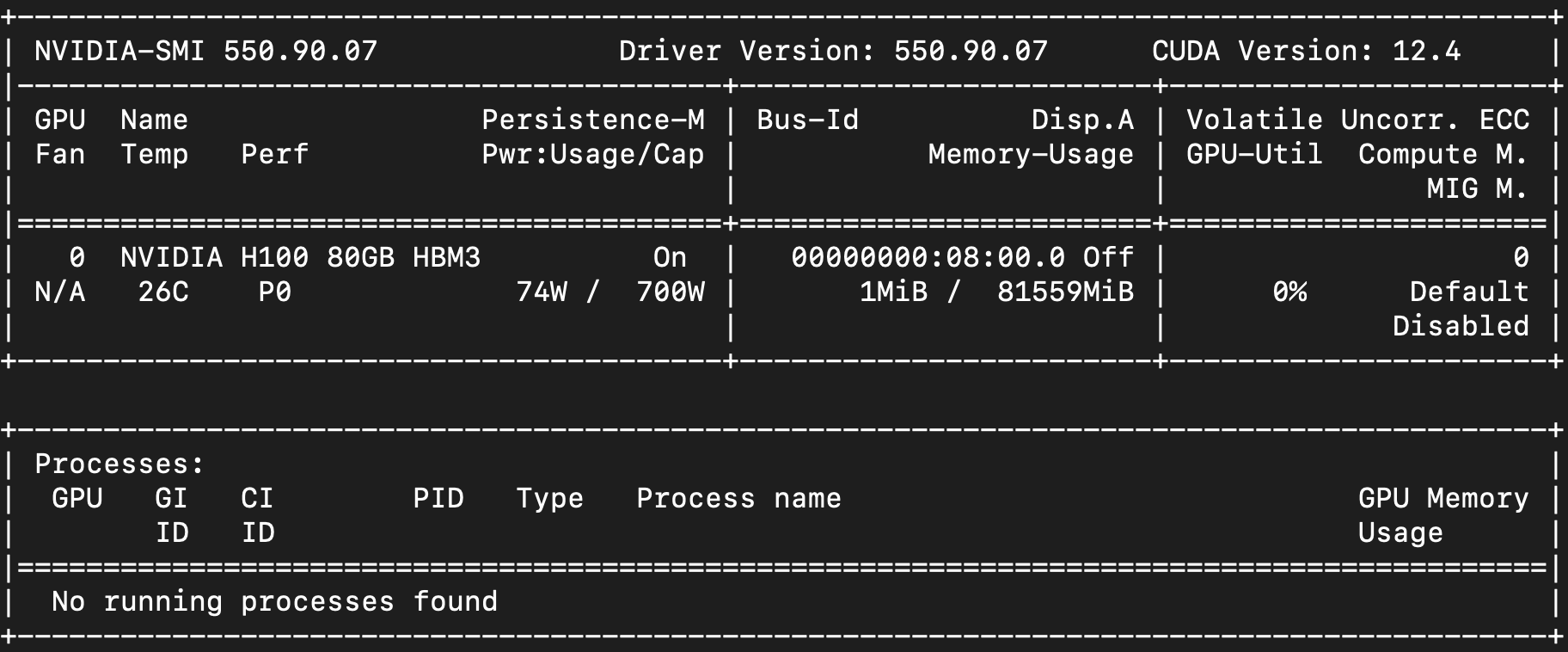

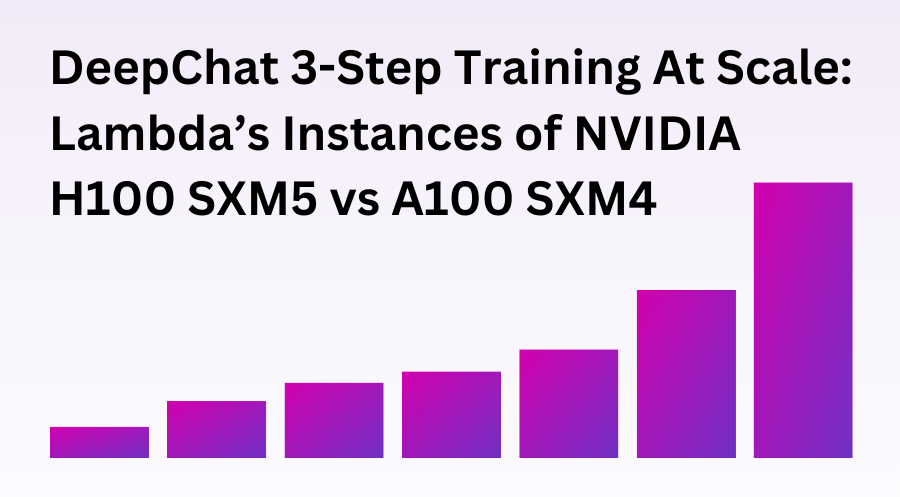

GPU benchmarks on Lambda’s offering of the NVIDIA H100 SXM5 vs. the NVIDIA A100 SXM4, using DeepSpeed-Chat’s 3-step training example.

Published by Maxx Garrison

Lambda has launched a new Hyperplane server with NVIDIA H100 GPUs and AMD EPYC 9004 series CPUs. The new AI server combines the fastest GPU type on the market, ...