Shaping the future of AI development

Compute, community, and cutting-edge research for AI developers defining what's next

Pushing the frontier of AI/ML research

Neural Software: from vision to reality

Join Lambda's co-founder and CEO Stephen Balaban as he unveils Neural Software: an entirely new way of thinking about software that can collaborate with humans, evolve over time, and adapt like never before.

Our work at NeurIPS 2025

01

Scaling laws for diffusion models

Diffusion beats autoregressive in data-constrained settings.

02

SpatialReasoner

03

Tensor decomposition for force-field prediction

04

Bifrost-1

05

BLEUBERI

06

OverLayBench

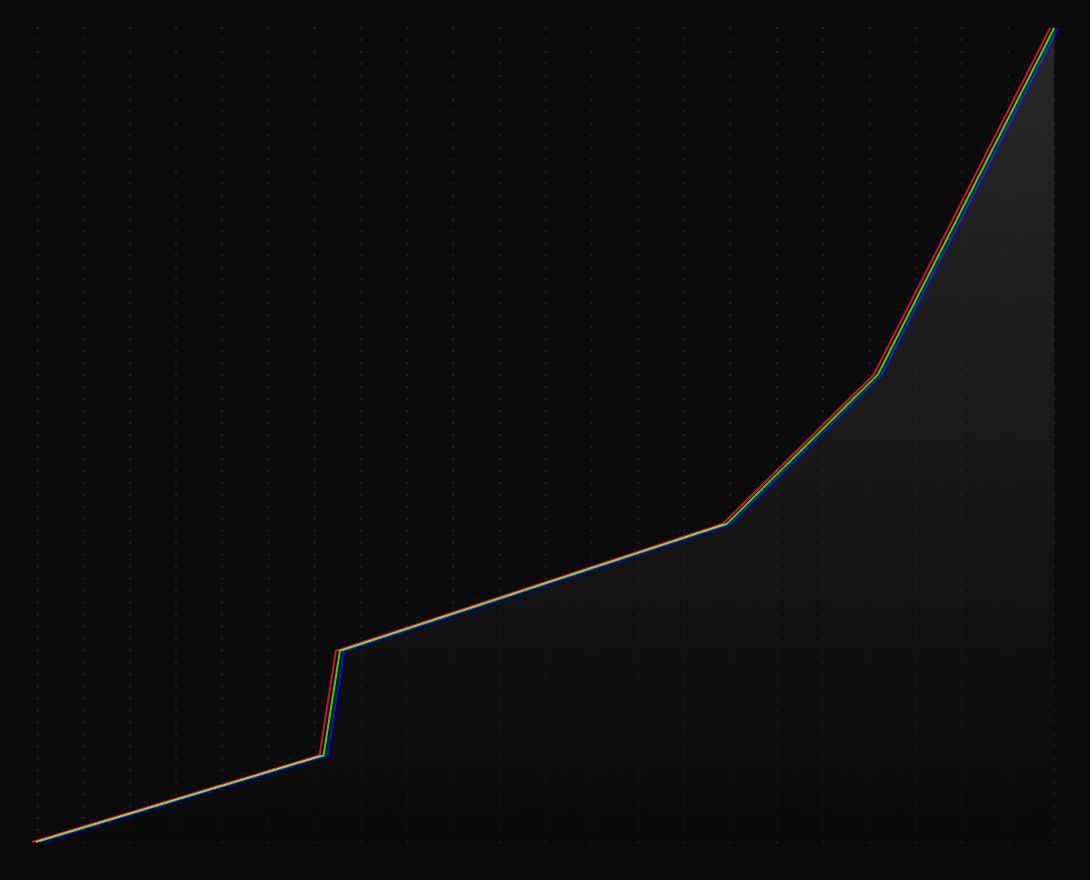

LLM performance benchmarks leaderboard

A clear, data-driven comparison of today's leading large language models. Standardized benchmark results cover top contenders like Meta's Llama 4 series, Alibaba's Qwen3, and the latest from DeepSeek, with critical performance metrics measuring everything from coding ability to general knowledge.

ML Times

Your go-to source for the latest in the field, curated by AI. Sift through the excess. Make every word count.

Best practices and system insights

01

Diffusion from scratch

02

Text2Video pretraining

03

GPU benchmarks

GPU throughput benchmarks for training deep neural networks.

04

MLCommons benchmark

Recognized by scholars and industry peers

Latent thought models

Teach LLMs to “think” before they speak and improve parameter and memory efficiency.

Product of experts with LLMs

Boosting performance on the ARC-AGI challenge is a matter of perspective.

* Winner, ARC-AGI 2024

DepR

Uses depth cues and diffusion models to turn a single image into a clean, instance-level 3D scene.

Video MMLU

A benchmark that tests whether models can truly follow and understand long, multi-subject lecture videos, revealing the limitations of current VLMs.

Latent adaptive planner

Robots learn a compact “plan space” from human demos and continually update their plans as the scene changes, enabling faster and more reliable manipulation.

AimBot

AimBot draws visual cues onto robot camera images so policies directly see where the gripper is in 3D, boosting manipulation success.

Word salad chopper

Detects when a reasoning model has drifted into meaningless repetition and cleanly cuts away those extra tokens, saving a lot of output cost with almost no quality loss.

DEL-ToM for theory-of-mind reasoning

Breaks social reasoning into “who-knows-what” steps and uses a checker to pick the most consistent answer, improving small models’ theory-of-mind performance.

VeriFastScore

Trains a single model to extract and verify all claims in a long answer at once using web evidence, speeding up long-form factuality evaluation.

Error typing for smarter rewards

Labels each reasoning step’s mistake type and converts those labels into scores, giving richer feedback and improving math problem solving with less data.

Breakthroughs backed by Lambda

01

SAEBench

A comprehensive benchmark for sparse autoencoders in language model interpretability

Adam Karvonen, Can Rager, Johnny Lin, Curt Tigges, Joseph Bloom, David Chanin, Yeu-Tong Lau, Eoin Farrell, Callum McDougall, Kola Ayonrinde, Demian Till, Matthew Wearden, Arthur Conmy, Samuel Marks, and Neel Nanda — ICML 2025

02

VideoHallu

Evaluating and mitigating multi-modal hallucinations on synthetic video understanding

Zongxia Li, Xiyang Wu, Guangyao Shi, Yubin Qin, Hongyang Du, Tianyi Zhou, Dinesh Manocha, and Jordan Lee Boyd-Graber — NeurIPS 2025

03

VLM2Vec-V2

Advancing multimodal embedding for videos, images, and visual documents

Rui Meng, Ziyan Jiang, Ye Liu, Mingyi Su, Xinyi Yang, Yuepeng Fu, Can Qin, Zeyuan Chen, Ran Xu, Caiming Xiong, and others — arXiv preprint 2025

04

Think, prune, train, improve

Scaling reasoning without scaling models

Caia Costello, Simon Guo, Anna Goldie, and Azalia Mirhoseini — ICLR 2025 workshop

05

NeoBERT

A next-generation BERT

Lola Le Breton, Quentin Fournier, Mariam El Mezouar, and Sarath Chandar — TMLR 2025