ShadeRunner: Chrome plugin for enhanced on-page research

In today's digital era, accessing information efficiently is crucial. Our new Chrome plugin, ShadeRunner, aims to simplify this process by offering a range of ...

Published by David Hartmann


Published by Chuan Li

If you're interested in training the next large transformer like DALL-E, Imagen, or BERT, a single GPU (or even a single 8x GPU instance!) might not be enough ...

Published by Kathy Bui

After a period of closed beta, we're excited to announce that persistent storage for Lambda GPU Cloud is now available for all A6000 and V100 instances in an ...

Published by Stephen Balaban

This curriculum provides an overview of free online resources for learning about deep learning. It includes courses, books, and even important people to follow.

Published by Stephen Balaban

Published by Remy Guercio

You can now use Lambda Cloud High Speed Filesystems to store data permanently on the Lambda Cloud. This post explains how to use Lambda Cloud Filesystems.

Published by Remy Guercio

This guide will walk you through the process of launching a Lambda Cloud GPU instance and using SSH to log in. While we offer both a Web Terminal and Jupyter ...

Published by Stephen Balaban

The desired end state of this tutorial is a running subnet manager on your switch. This tutorial will walk you through the steps required to set up a Mellanox ...

Published by Chuan Li

TensorFlow 2 is now live! This tutorial walks you through the process of building a simple CIFAR-10 image classifier using deep learning. In this tutorial, we ...

Published by Chuan Li

This post walks you through the steps of setting up a Horovod + Keras environment for multi-GPU training.

Published by Chuan Li

One of the most frequently asked questions we get at Lambda Labs is, “how do I track resource utilization for deep learning jobs?” Resource utilization tracking can help ...

Published by Chuan Li

Distributed training allows deep learning tasks to scale up, so that bigger models can be trained or training can proceed faster. In a previous ...

Published by Chuan Li

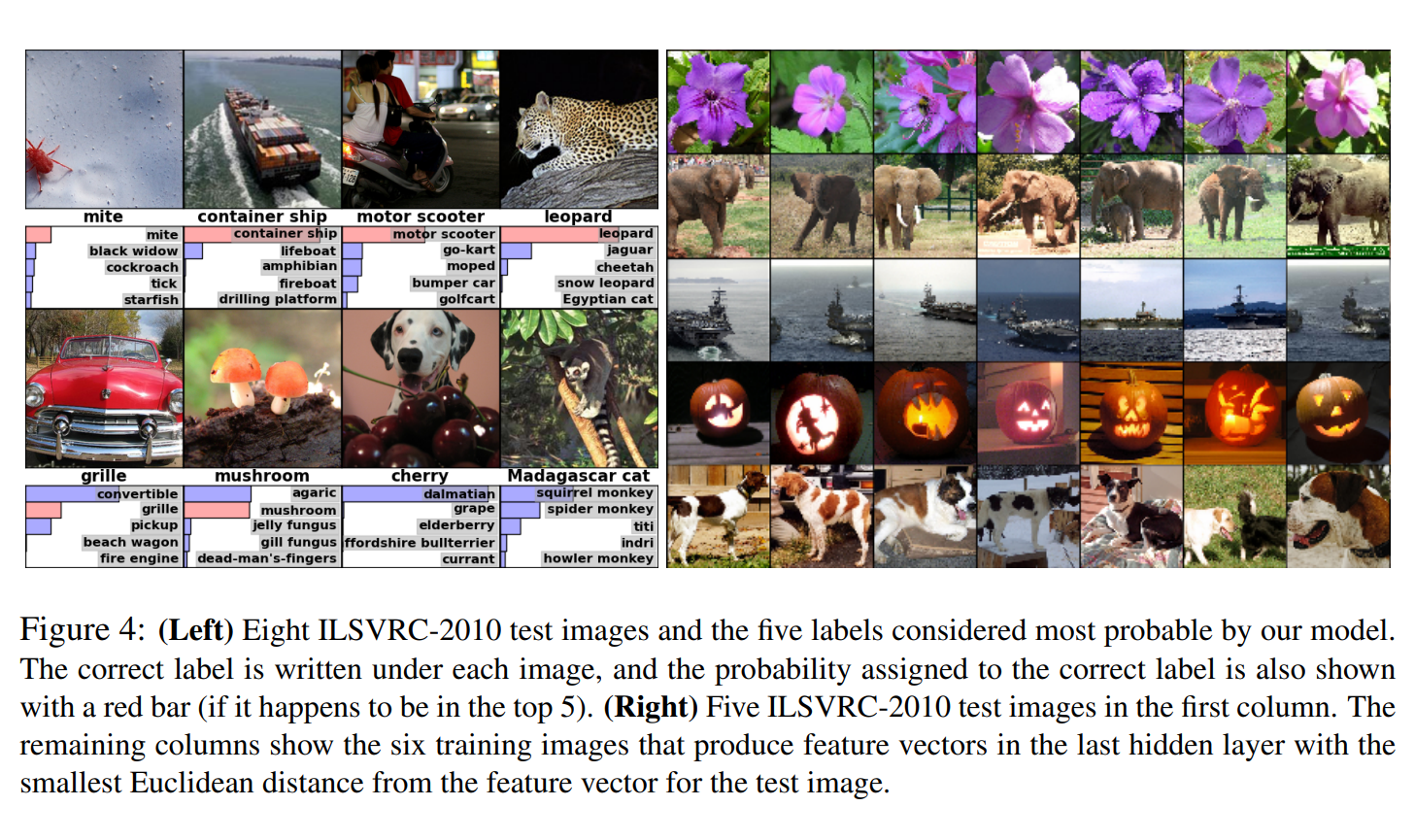

During training, the weights of a neural network are updated so that the model performs better on the training data. For a while, improvements on the training ...

Published by Chuan Li

This tutorial combines two topics from previous tutorials: saving models and callbacks. Checkpoints are snapshots of a model's state saved during training. With ...

Published by Chuan Li

This tutorial shows you how to perform transfer learning using TensorFlow 2.0. We will cover: