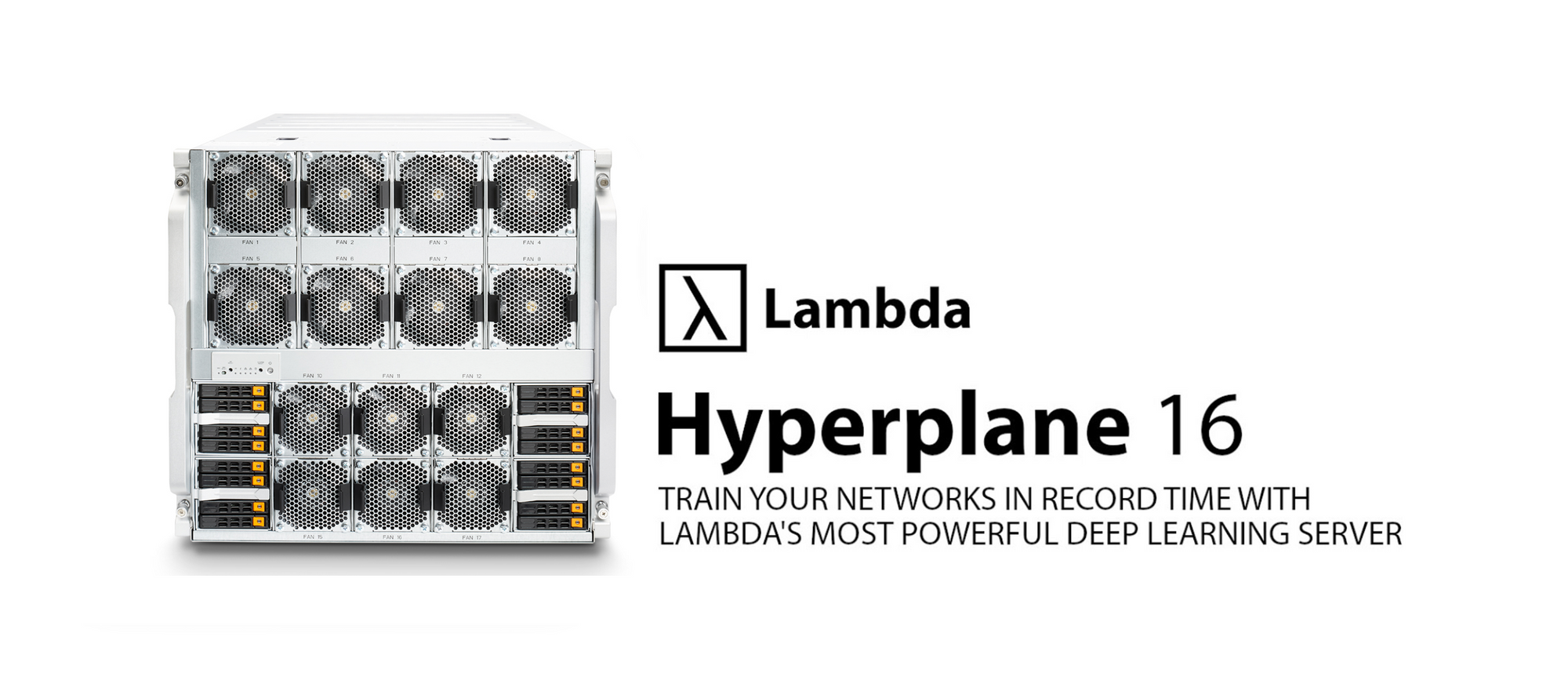

Training Neural Networks in Record Time with the Hyperplane-16

by Chuan Li, PhD

Lambda’s 1-Click Clusters (1CC) give AI teams streamlined access to scalable, multi-node GPU clusters without the usual complexity of distributed infrastructure. Now we're pushing further by integrating NVIDIA's Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) into our multi-tenant 1CC environments. SHARP performs collective reductions such as all-reduce inside the network switches rather than on the GPUs' hosts. This reduces communication latency and improves bandwidth efficiency, which directly speeds up distributed AI training.
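
As a rough illustration of why in-network aggregation saves bandwidth and latency, the sketch below compares per-GPU traffic and sequential communication steps for a ring all-reduce against an idealized switch-side reduction. It is a back-of-the-envelope model under stated assumptions, not Lambda's or NVIDIA's implementation. All function names are hypothetical, and the model ignores link speeds and protocol overhead. It also treats the aggregation tree as a perfect tree with a fixed radix.

```python
"""Back-of-the-envelope model of all-reduce traffic (illustrative only).

Hypothetical helpers. Assumes a bandwidth-optimal ring all-reduce and an
idealized in-network reduction in which switches sum the buffers.
"""


def ring_allreduce_bytes_sent(n_gpus: int, message_bytes: float) -> float:
    """Bytes each GPU sends in a ring all-reduce (reduce-scatter + all-gather)."""
    # Each of the two phases sends (n - 1) chunks of size message_bytes / n.
    return 2 * (n_gpus - 1) / n_gpus * message_bytes


def in_network_allreduce_bytes_sent(n_gpus: int, message_bytes: float) -> float:
    """Bytes each GPU sends when the switches perform the reduction (idealized)."""
    # Each GPU uploads its buffer once; the reduced result comes back down.
    return message_bytes if n_gpus > 1 else 0.0


def ring_allreduce_steps(n_gpus: int) -> int:
    """Sequential communication steps in a ring all-reduce."""
    return 2 * (n_gpus - 1)


def tree_allreduce_steps(n_gpus: int, radix: int) -> int:
    """Hops up and back down an idealized aggregation tree of the given radix."""
    depth, leaves = 0, 1
    while leaves < n_gpus:  # grow the tree until it covers every GPU
        leaves *= radix
        depth += 1
    return 2 * depth  # reduce on the way up, broadcast on the way down


if __name__ == "__main__":
    n, size = 16, 1.0  # assumed: 16 GPUs, 1 GB gradient buffer
    print(f"ring:       {ring_allreduce_bytes_sent(n, size):.3f} GB sent, "
          f"{ring_allreduce_steps(n)} steps")
    print(f"in-network: {in_network_allreduce_bytes_sent(n, size):.3f} GB sent, "
          f"{tree_allreduce_steps(n, radix=4)} steps")
```

With the assumed values (16 GPUs, a 1 GB buffer, radix 4), the ring sends 1.875 GB per GPU over 30 steps, while the in-network case sends 1.000 GB over 4 steps. The byte savings approach 2x as the GPU count grows, and the step count drops from linear to logarithmic in the number of GPUs.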

Published by Anket Sah

Published by Chuan Li

The desired end state of this tutorial is a running subnet manager on your switch. This tutorial walks you through the steps required to set up a Mellanox ...

Published by Stephen Balaban

TensorFlow 2 is now live! This tutorial walks you through the process of building a simple CIFAR-10 image classifier using deep learning. In this tutorial, we ...

Published by Chuan Li

Create a cloud account instantly to spin up GPUs today or contact us to secure a long-term contract for thousands of GPUs