Voltron Data Case Study: Why ML teams are using Lambda Reserved Cloud Clusters

One of the biggest trends in machine learning is the development of large transformer models like BERT and diffusion models like Stable Diffusion. These large ...

Lambda’s 1-Click Clusters (1CC) give AI teams streamlined access to scalable, multi-node GPU clusters, cutting through the complexity of distributed infrastructure. Now we're going further by integrating NVIDIA's Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) into our multi-tenant 1CC environments. SHARP offloads collective operations such as all-reduce to the network switches, which reduces communication latency and improves bandwidth efficiency, directly speeding up the training of distributed AI workloads.
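A back-of-envelope model can make the bandwidth claim concrete. The sketch below assumes an idealized network (no latency, no protocol overhead, full-duplex links) and compares how many bytes each node must send for one all-reduce: a classic ring all-reduce versus an in-network reduction in which switches aggregate the data, as SHARP does. The function names are hypothetical and the numbers are illustrative, not Lambda or NVIDIA measurements.

```python
def ring_allreduce_bytes_sent(message_bytes: float, num_nodes: int) -> float:
    """Bytes each node sends in a classic ring all-reduce (idealized model).

    A ring all-reduce runs a reduce-scatter phase and an all-gather phase,
    each taking (N - 1) steps that move a 1/N chunk of the message.
    """
    if num_nodes < 2:
        return 0.0  # nothing to exchange with a single node
    return 2 * (num_nodes - 1) / num_nodes * message_bytes


def in_network_allreduce_bytes_sent(message_bytes: float, num_nodes: int) -> float:
    """Bytes each node sends with an idealized in-switch reduction (assumed model).

    Each node sends its full buffer up the switch tree once; the switches
    perform the reduction and send the result back down to every node.
    """
    if num_nodes < 2:
        return 0.0
    return float(message_bytes)


if __name__ == "__main__":
    size = 1 << 30  # 1 GiB of gradients, an arbitrary example size
    for n in (2, 8, 64, 512):
        ring = ring_allreduce_bytes_sent(size, n)
        net = in_network_allreduce_bytes_sent(size, n)
        print(f"N={n:4d}  ring={ring / size:.3f}x  in-network={net / size:.3f}x  ratio={ring / net:.3f}")
```

Under these assumptions, the per-node traffic of a ring all-reduce approaches twice the message size as the cluster grows, while the in-network model stays at one message size. Real-world gains also depend on latency, message size, and topology, which this sketch ignores.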

Published on by Anket Sah

Published on by Lauren Watkins

Available in October 2022, the NVIDIA® GeForce RTX 4090 is the newest GPU for gamers, creators, students, and researchers. In this post, we benchmark the RTX 4090 to ...

Published on by Chuan Li

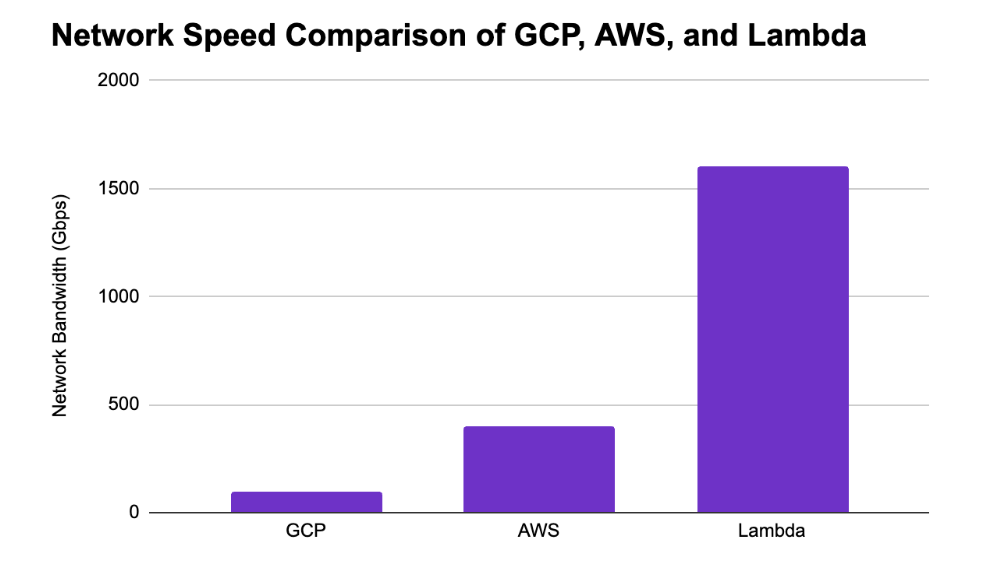

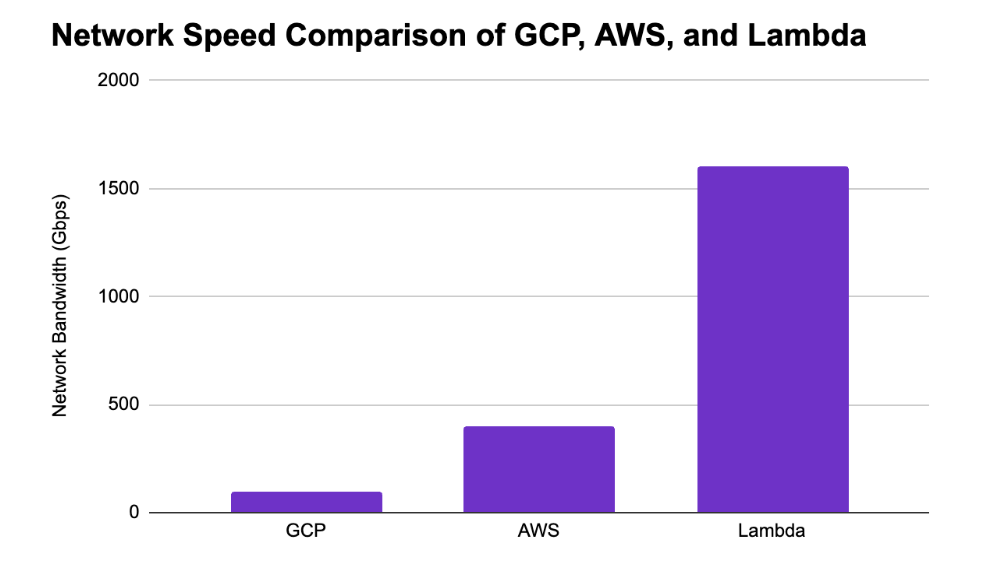

While we continue to add more NVIDIA A100s to our cloud, the Lambda GPU cloud team is also making upgrades and improvements to make our cloud platform easier ...

Published on by Cody Brownstein
