1, 2 & 4-GPU NVIDIA Quadro RTX 6000 Lambda GPU Cloud Instances

Published by Remy Guercio

Starting today, you can spin up virtual machines with 1, 2, or 4 NVIDIA® Quadro RTX™ 6000 GPUs on Lambda's GPU cloud. We’ve built this new general-purpose ...

Published by Stephen Balaban

Introducing the Lambda Echelon

Lambda Echelon is a GPU cluster designed for AI. It comes with the compute, storage, network, power, and support you need to ...

Published by Michael Balaban

Check out the discussion on Reddit.

Published by Michael Balaban

The goal of this post is to guide your thinking on GPT-3. This post will:

Published by Chuan Li

by Chuan Li, PhD

Published by Stephen Balaban

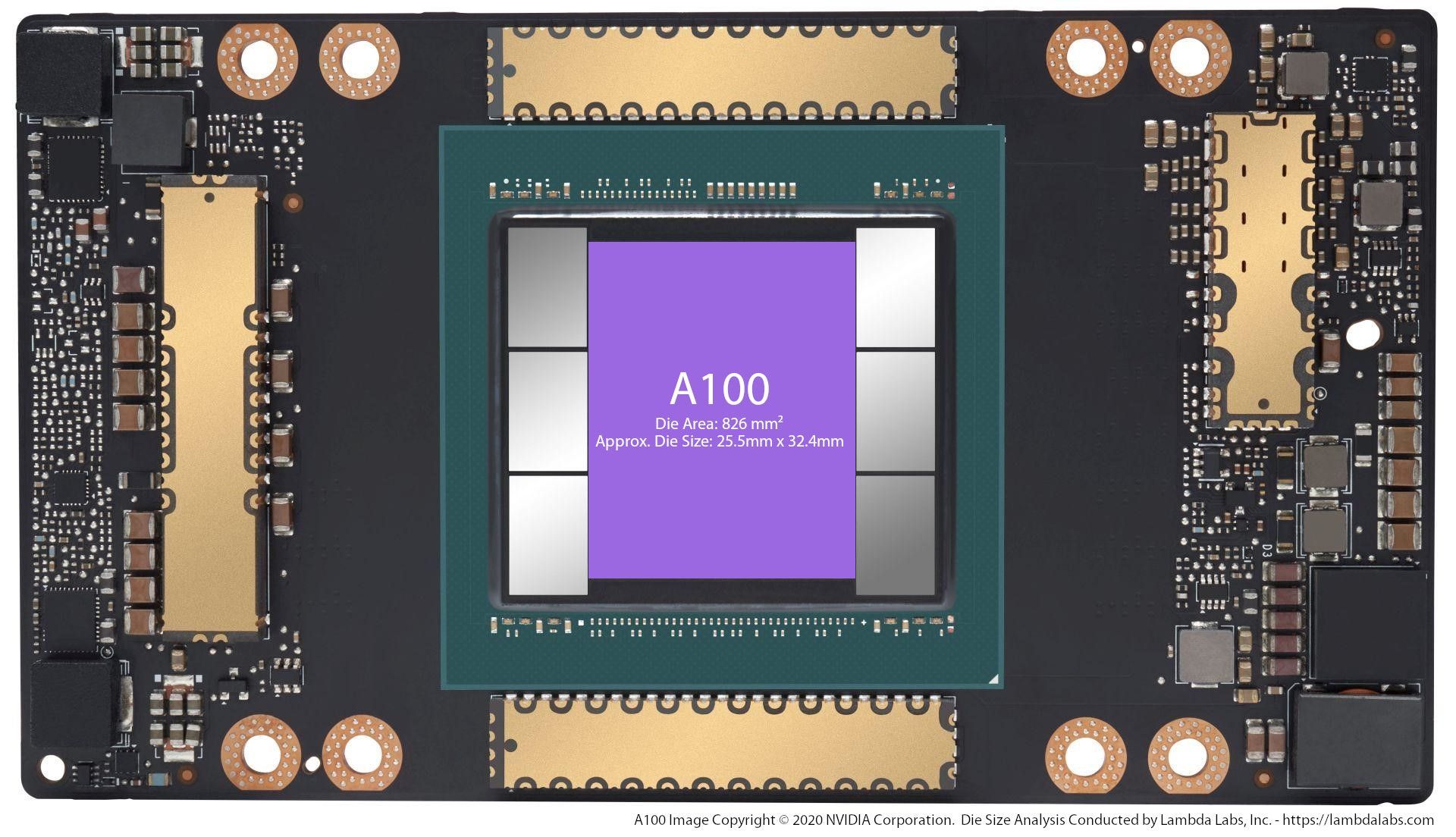

Lambda customers are starting to ask about the new NVIDIA A100 GPU and our Hyperplane A100 server. The A100 will likely see large gains on models like ...

Published by Remy Guercio

With most (if not all) machine learning and deep learning researchers and engineers now working from home due to COVID-19, we’ve seen a massive increase in the ...

Published by Remy Guercio

You can now use Lambda Cloud High Speed Filesystems to permanently store data on the Lambda Cloud. Click here to learn how to use Lambda Cloud Filesystems.

Published by Remy Guercio

This guide will walk you through the process of launching a Lambda Cloud GPU instance and using SSH to log in. While we offer both a Web Terminal and Jupyter ...

Published by Stephen Balaban

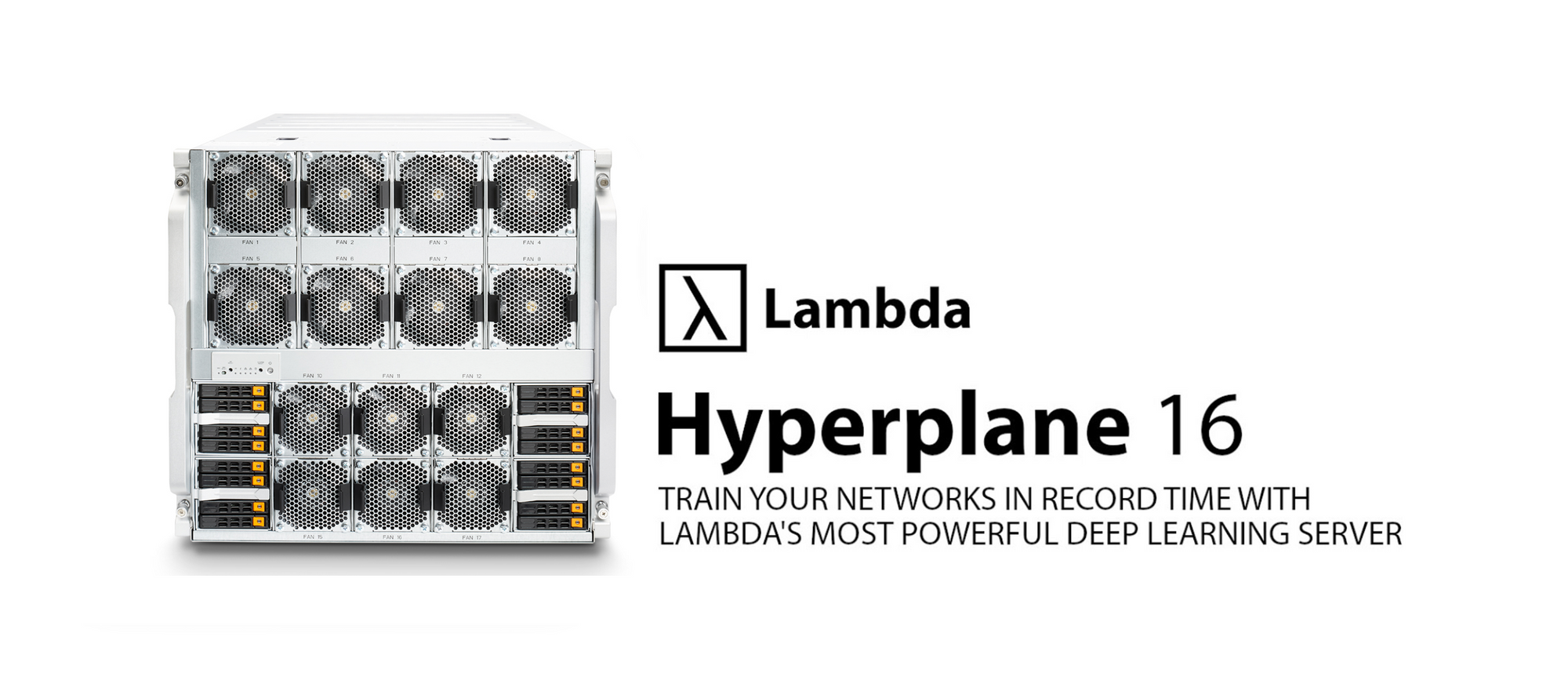

In this post we'll walk through using our Total Cost of Ownership (TCO) calculator to examine the cost of a variety of Lambda Hyperplane-16 clusters. We have ...

Published by Michael Balaban

State-of-the-art (SOTA) deep learning models have massive memory footprints. Many GPUs don't have enough VRAM to train them. In this post, we determine which ...

Published by Chuan Li

by Chuan Li, PhD

Published by Stephen Balaban

The desired end state of this tutorial: a running subnet manager on your switch. This tutorial will walk you through the steps required to set up a Mellanox ...

Published by Chuan Li

TensorFlow 2 is now live! This tutorial walks you through the process of building a simple CIFAR-10 image classifier using deep learning. In this tutorial, we ...

Published by Chuan Li

This post will walk you through the steps of setting up a Horovod + Keras environment for multi-GPU training.