Be First, Scale Fast - NVIDIA Blackwell GPU Clusters Now Live on Lambda


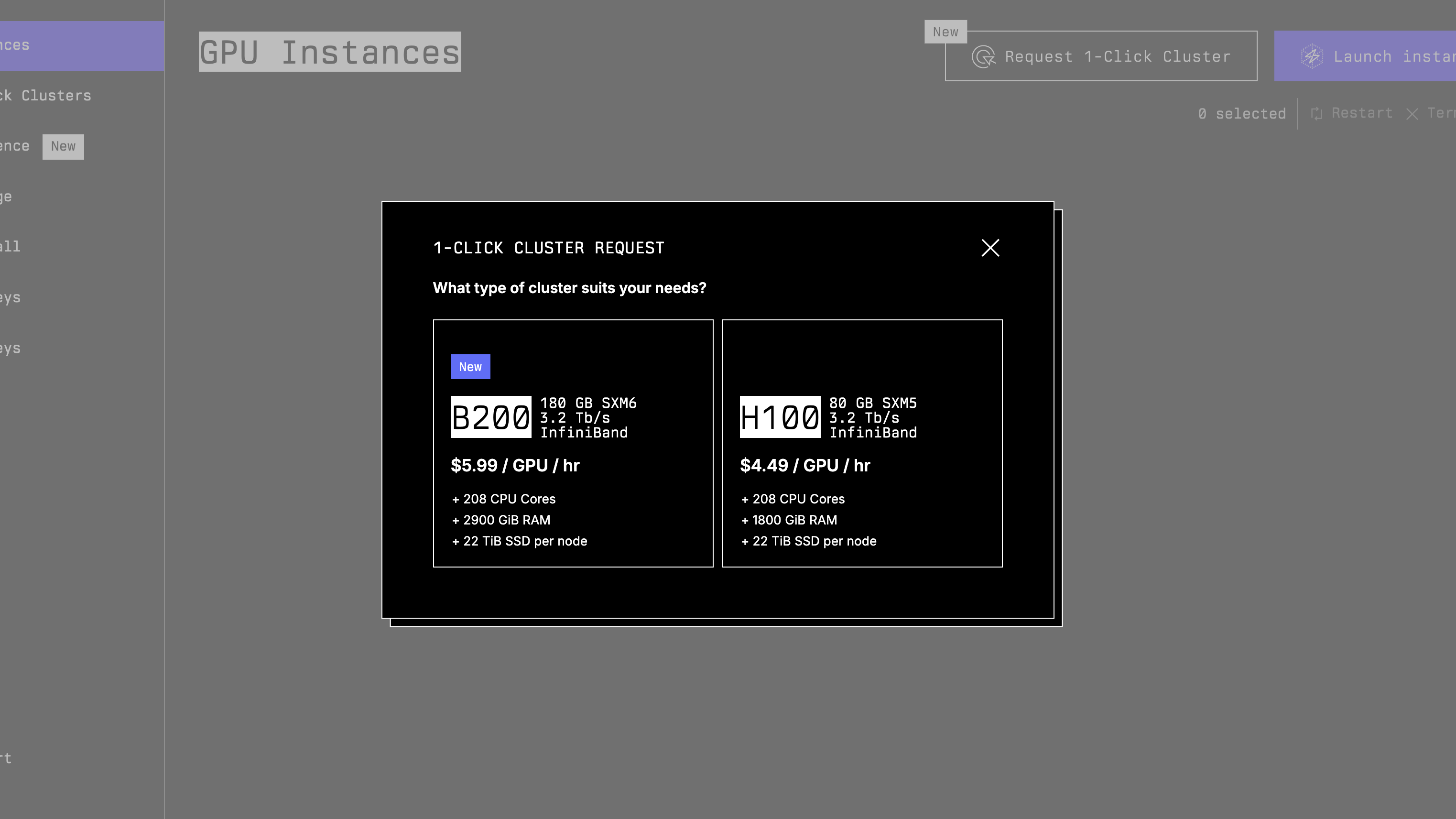

1-Click Cluster with NVIDIA HGX B200

NVIDIA Blackwell architecture is now live on Lambda Cloud. Our 1-Click Clusters now feature NVIDIA HGX B200 alongside our existing NVIDIA HGX H100 option. AI developers can use NVIDIA Blackwell GPUs to power leading-edge workloads such as reinforcement learning and reasoning AI. These workloads benefit from technological breakthroughs including the second-generation Transformer Engine, fifth-generation NVIDIA NVLink interconnect, and a fully optimized AI software stack.

Lambda’s 1-Click Clusters are designed to simplify the deployment of multi-node GPU clusters, enabling seamless scaling for distributed training and inference. Nodes are interconnected with NVIDIA Quantum-2 InfiniBand at an aggregate throughput of 3.2 Tb/s, providing low-latency, high-bandwidth communication between them.

The addition of NVIDIA HGX B200 to Lambda Cloud provides a significant leap in performance, offering:

- Up to 3x training performance: Accelerate the development of complex models and reduce time-to-insight.

- Up to 15x inference performance: Deploy real-time applications with enhanced responsiveness and efficiency.

Available now: NVIDIA HGX B200 2-Week Clusters

To give early adopters an opportunity to evaluate the capabilities of the NVIDIA Blackwell architecture, we have launched 16 NVIDIA Blackwell GPU clusters, available for periods of up to two weeks per organization. This first-to-market access allows teams to test and optimize their workflows on the latest hardware.

Reserve your 1-Click Cluster with NVIDIA HGX B200 now through the Lambda Cloud dashboard.

NVIDIA Blackwell Ultra Platform Coming to Lambda Cloud

Lambda will be one of the first NVIDIA Cloud Partners to deploy the latest NVIDIA Blackwell Ultra platform announced at the NVIDIA GTC global AI conference. The NVIDIA GB300 NVL72 system will be available through Lambda’s On-Demand & Reserved Cloud.

NVIDIA HGX B300 NVL16 and GB300 NVL72, based on the NVIDIA Blackwell Ultra architecture, are built for the age of reasoning. They offer 7x more AI compute than the NVIDIA Hopper generation, more memory to support growing models and mixture-of-experts (MoE) architectures, and integration with the NVIDIA Quantum-X800 InfiniBand networking platform, which provides double the bandwidth of previous generations. HGX B300 NVL16 comes with 2.3 TB of HBM3e memory and a 16-way NVLink domain, while GB300 NVL72 comes with 20 TB of HBM3e memory and a 72-GPU NVLink domain.

Blackwell Ultra provides leading performance for AI reasoning, agentic AI, and large-scale distributed training and inference workloads.

1-Click Cluster with NVIDIA Blackwell Ultra

Lambda’s 1-Click Cluster with Blackwell Ultra will provide on-demand access to interconnected clusters for large-scale AI training and inference. It features NVIDIA Quantum-X800 InfiniBand networking with 6.4 Tb/s of bandwidth between nodes in a fully non-blocking, rail-optimized design for the highest possible network performance. Lambda’s 1-Click Clusters with NVIDIA Blackwell Ultra scale from 16 GPUs to thousands and are paired with high-performance storage and optional Kubernetes or Slurm orchestration.
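As a quick unit check, the two InfiniBand figures quoted in this post can be related directly. This assumes both numbers describe the same kind of aggregate inter-node bandwidth, which the post implies but does not state explicitly. Dividing by 8 bits per byte converts each figure to bytes per second.

```latex
\frac{6.4\ \text{Tb/s}}{3.2\ \text{Tb/s}} = 2
\qquad
\frac{3.2\ \text{Tb/s}}{8\ \text{bit/byte}} = 400\ \text{GB/s}
\qquad
\frac{6.4\ \text{Tb/s}}{8\ \text{bit/byte}} = 800\ \text{GB/s}
```

The factor of 2 is consistent with the doubling of bandwidth described for NVIDIA Quantum-X800 InfiniBand relative to earlier generations.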

Private Cloud with Blackwell Ultra

Lambda’s Private Cloud with NVIDIA Blackwell Ultra GPUs offers dedicated large-scale clusters with GPUs, storage, and networking designed for AI workloads. Private cloud environments can scale to 10k+ GPUs and leverage only the latest and greatest infrastructure, built for the next generation of LLMs and other large-scale models.

Inception

As part of the NVIDIA Cloud Partner (NCP) Program, Lambda is providing companies in the NVIDIA Inception and NVIDIA Connect programs with an exclusive $7,500 cloud credit offer. The credits can be used toward on-demand NVIDIA GPU instances and toward inference on the best open-source models through a serverless API endpoint.

Inception and Connect companies can start developing immediately, with no rate limits on the inference endpoint, no quota restrictions on accessing the best GPUs on demand, all open-source AI tools pre-installed, and no egress or ingress fees. The offer is available in NVIDIA’s brand-new portal for Inception and Connect members.

Lambda Hardware with NVIDIA Blackwell

At Lambda, we are always striving to push the boundaries of performance, efficiency, and scalability in our GPU infrastructure offerings. Today, we are excited to announce the integration of the new NVIDIA RTX PRO™ 6000 Blackwell Server and Workstation Edition GPUs into our product offerings. The cutting-edge NVIDIA Blackwell architecture brings a new level of power and versatility to AI workloads, high-performance computing (HPC), and graphics-intensive applications.

Scalar GPU Server with NVIDIA RTX PRO 6000 Blackwell Server Edition

With 96 GB of ultra-fast GDDR7 memory, the NVIDIA RTX PRO 6000 Blackwell Server Edition is the new ultimate universal data center GPU, joining the rest of NVIDIA’s Blackwell architecture GPU lineup. Lambda’s Scalar GPU server will support up to eight of the new NVIDIA RTX PRO 6000 Blackwell GPUs, which deliver exceptional performance for the most demanding generative AI, graphics, and video applications. With a total system capacity of up to 768 GB of GDDR7 memory, these Blackwell-powered servers are ideal for large language models (LLMs), generative AI, and other deep learning applications.
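The 768 GB figure is eight GPUs at 96 GB each. The sketch below turns that arithmetic into a rough check of whether a model's weights fit in one fully populated Scalar server. It is a simplified, weights-only estimate under stated assumptions: decimal gigabytes, a fixed number of bytes per parameter for each precision, and no allowance for KV cache, activations, or framework overhead, all of which add real memory pressure in practice. The function names and model sizes are hypothetical and chosen only for illustration.

```python
"""Weights-only GPU memory sizing sketch (illustrative assumptions, not vendor guidance).

Assumptions: decimal units (1 GB = 1e9 bytes); all weights stored at one precision;
KV cache, activations, optimizer state, and runtime overhead are ignored.
"""

# Approximate bytes per parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "fp8": 1.0, "fp4": 0.5}

GPU_MEMORY_GB = 96  # RTX PRO 6000 Blackwell Server Edition, per GPU
MAX_GPUS = 8        # maximum number of these GPUs in a Lambda Scalar server


def system_memory_gb(gpus: int = MAX_GPUS, per_gpu_gb: float = GPU_MEMORY_GB) -> float:
    """Total GPU memory in one server: number of GPUs times memory per GPU."""
    return gpus * per_gpu_gb


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight footprint: 1e9 params x N bytes = N GB per billion parameters."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, capacity_gb: float) -> bool:
    """True if the weights alone fit within the given memory capacity."""
    return weights_gb(params_billion, precision) <= capacity_gb


if __name__ == "__main__":
    capacity = system_memory_gb()  # 8 x 96 GB = 768 GB
    # Hypothetical model sizes, used only to illustrate the arithmetic.
    for params, precision in [(70, "bf16"), (405, "bf16"), (405, "fp8")]:
        need = weights_gb(params, precision)
        verdict = "fits" if fits(params, precision, capacity) else "does not fit"
        print(f"{params}B @ {precision}: {need:.0f} GB of weights, {verdict} in {capacity:.0f} GB")
```

Under these assumptions, a hypothetical 405B-parameter model needs about 810 GB for its weights in BF16, more than 768 GB, but about 405 GB in FP8. This illustrates why lower-precision formats matter when serving large models on a single server.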

Vector Pro GPU Workstation with NVIDIA RTX PRO Blackwell Workstation Edition

Coming to the Vector Pro product line is NVIDIA's new lineup of Blackwell workstation GPUs, including the NVIDIA RTX PRO 6000 Blackwell Workstation Edition. These GPUs feature the revolutionary NVIDIA Blackwell architecture for outstanding performance across a wide variety of workloads. With up to 96 GB of high-speed GDDR7 memory and the latest-generation Tensor and RT Cores, the NVIDIA RTX PRO Blackwell Workstation Edition GPUs are the ultimate way to supercharge your workstation. Vector Pro workstations can be configured with up to four Blackwell workstation GPUs and are ready to support your AI journey, wherever you are on it.

New NVIDIA GB300 Desktop AI Supercomputer

Lambda is proud to partner with NVIDIA to offer the new NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip in our Vector Pro system. The new Grace Blackwell platform brings the Arm architecture to deskside systems and offers an unprecedented 784 GB of coherent memory under your desk. The Grace Blackwell Ultra GPU, connected to the Grace CPU via the NVLink-C2C interconnect, comes with the latest NVIDIA CUDA cores and fifth-generation Tensor Cores while optimizing system communication and performance. The NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip will be coming to Vector Pro later this year.

What’s next with NVIDIA Blackwell GPUs & Lambda

Our collaboration with NVIDIA enables Lambda to be one of the first to market with NVIDIA Blackwell GPUs, whether in our cloud or in your data center. Lambda customers, from AI startups to the Fortune 500, will continue to have access to the fastest and most effective infrastructure to power their largest and most demanding AI training projects. Reach out to our team to learn more.